Most explanations of “Data as a Product” stay at the conceptual level: ownership, contracts, and autonomy. That’s useful—but teams only internalize the pattern when they see a complete end-to-end flow working with real code.

In this post, I’ll walk through a tiny but fully functional demo that shows how two data products collaborate through an Output Port. The upstream product publishes a simple “surprise” dataset; the downstream product consumes it through a subscription and turns it into a visual.

It’s intentionally playful, but the mechanics are exactly the same ones you would use for real domain data.

The cast: two data products and one Output Port

Platform: Microsoft Fabric (Spark notebooks, Lakehouse-style execution)

Upstream data product

- DataProductName: dp-demo-fabric-ms-swisshealth-upstream

- Responsibility: produce data and publish it via an Output Port

Output Port (the contract boundary)

- Outport: op-demo-swisshealth-suprise

- Includes:

  - contract version (1)

  - a stable “product interface” for consumers

  - one-click / governed subscription (“Quicksubscribe”)

Downstream data product

- DataProductName: dp-demo-fabric-ms-swisshealth-downnstream

- Responsibility: subscribe, consume, create value downstream

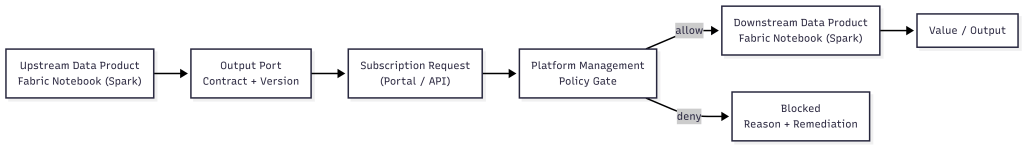

The mental model: contracts and decoupling

Think of the Output Port like a product API:

- The upstream team owns implementation details (how the data is computed).

- Consumers only depend on the contract (Output Port key + version + asset name).

- Versioning prevents accidental breaking changes.

- Subscriptions create explicit dependencies and governance control points.

Even in this tiny demo, the key idea holds: teams connect through contracts, not through internal tables.
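To make the "product API" analogy concrete, here is a minimal sketch of the four pieces a consumer depends on, bundled into one immutable value. The class name `OutputPortRef` and the `address()` string format are hypothetical and only serve as illustration; the platform may address Output Ports differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutputPortRef:
    """Hypothetical value object: everything a consumer is allowed to depend on."""
    data_product: str  # upstream data product key
    outport: str       # Output Port key
    version: str       # contract version
    asset: str         # logical asset name inside the port

    def address(self) -> str:
        # Illustrative string form only, not a real platform path.
        return f"{self.data_product}/{self.outport}/v{self.version}/{self.asset}"


ref = OutputPortRef(
    data_product="dp-demo-fabric-ms-swisshealth-upstream",
    outport="op-demo-swisshealth-suprise",
    version="1",
    asset="surprise_pixels",
)
print(ref.address())
```

Nothing in this reference mentions internal tables, notebooks, or storage paths, which is the point: the upstream team can change all of those without touching the consumer.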

Step 1 — Upstream: create and publish a “surprise” dataset

The upstream notebook generates a small pixel grid (6 rows by 9 columns), converts it into a Spark DataFrame, and writes it to the Output Port as a Delta/Parquet-backed asset.

Upstream notebook code (producer)

import numpy as np

# `spark` is the session predefined in Fabric notebooks.
# `io` is assumed to be the platform's Output Port helper module, already
# available in the notebook (it is not Python's standard-library `io`).

# Surprise pixel grid: 6 rows x 9 columns, 1 = filled pixel
surprise_pixels = np.array([
    [0,1,1,0,0,0,1,1,0],
    [1,1,1,1,0,1,1,1,1],
    [1,1,1,1,1,1,1,1,1],
    [0,1,1,1,1,1,1,1,0],
    [0,0,1,1,1,1,1,0,0],
    [0,0,0,1,1,1,0,0,0]
])

# Convert to a list of lists of plain Python ints so Spark can infer the schema
surprise_list = surprise_pixels.tolist()

# One column per pixel position: pixel_1 ... pixel_9
num_cols = surprise_pixels.shape[1]
columns = [f'pixel_{i+1}' for i in range(num_cols)]

# Create Spark DataFrame
df_surprise = spark.createDataFrame(surprise_list, columns)

# Logical asset name inside the outport
table_name = "surprise_pixels"

# Write to Output Port (contract key + version)
surprise_pq_writer = io.ParquetOutportWriter(
    target_outport_key='op-demo-swisshealth-suprise',
    target_version_nr='1'
)
surprise_pq_writer.write(df_surprise, table_name)

What happens conceptually:

- You create a dataset (df_surprise).

- You write it to the Output Port contract (op-demo-swisshealth-suprise, version 1).

- The platform can now expose that contract for governed subscription.
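Because the Output Port is a contract, it can be worth checking the data against that contract before writing. The sketch below is one possible pre-publish check in plain Python. The function `validate_pixel_grid` and the rules it enforces (9 columns, values 0 or 1) are assumptions made for this demo, not something the platform provides.

```python
def validate_pixel_grid(rows, expected_cols, allowed=(0, 1)):
    """Return a list of human-readable contract violations (empty means OK)."""
    problems = []
    for r, row in enumerate(rows):
        if len(row) != expected_cols:
            problems.append(f"row {r}: expected {expected_cols} columns, got {len(row)}")
        bad = [v for v in row if v not in allowed]
        if bad:
            problems.append(f"row {r}: values outside {allowed}: {bad}")
    return problems


# Same grid as in the upstream notebook, as plain Python lists
grid = [
    [0, 1, 1, 0, 0, 0, 1, 1, 0],
    [1, 1, 1, 1, 0, 1, 1, 1, 1],
    [1, 1, 1, 1, 1, 1, 1, 1, 1],
    [0, 1, 1, 1, 1, 1, 1, 1, 0],
    [0, 0, 1, 1, 1, 1, 1, 0, 0],
    [0, 0, 0, 1, 1, 1, 0, 0, 0],
]

violations = validate_pixel_grid(grid, expected_cols=9)
print(violations or "grid matches the assumed contract")
```

In a real product the same idea would apply to column names, types, and nullability rather than pixel values.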

Step 2 — Subscription: “Quicksubscribe” (consumer onboarding)

In your portal, the consumer subscribes to the Output Port:

- Producer: dp-demo-fabric-ms-swisshealth-upstream

- Output Port: op-demo-swisshealth-suprise

- Version: 1

This is a critical product-thinking moment:

- Subscription becomes a tracked dependency

- It can be checked by policies (e.g., medallion rules, access rules, cycle detection)

- It creates the foundation for operational signals (freshness, contract changes, incidents)
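Cycle detection is a good example of a policy that only becomes possible once subscriptions are tracked as explicit edges. The following is a minimal sketch of such a check, assuming the subscription graph is available as a plain dictionary; the function name `would_create_cycle` and the data layout are hypothetical, not the platform's actual policy engine.

```python
def would_create_cycle(subscriptions, consumer, producer):
    """Check whether adding the edge consumer -> producer would close a loop.

    `subscriptions` maps each data product to the set of products it consumes from.
    """
    # A cycle appears if the producer already depends, directly or
    # transitively, on the would-be consumer.
    stack, seen = [producer], set()
    while stack:
        node = stack.pop()
        if node == consumer:
            return True
        if node in seen:
            continue
        seen.add(node)
        stack.extend(subscriptions.get(node, ()))
    return False


UP = "dp-demo-fabric-ms-swisshealth-upstream"
DOWN = "dp-demo-fabric-ms-swisshealth-downnstream"

# The demo's single subscription: downstream consumes from upstream
existing = {DOWN: {UP}}

# Upstream now trying to subscribe to downstream would close the loop
print(would_create_cycle(existing, consumer=UP, producer=DOWN))
```

A portal could run a check like this at subscription time and reject the request before any data flows.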

Step 3 — Downstream: read and turn the contract into value

The downstream notebook reads the output from the upstream product via the Output Port reader, converts it to pandas, and visualizes it using matplotlib.

Downstream notebook code (consumer)

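import matplotlib.pyplot as plt

# `io` is assumed to be the platform's Output Port helper module, already
# available in the notebook (it is not Python's standard-library `io`).

# Address the upstream contract: product + Output Port + version
surprise_pq_reader = io.ParquetOutportReader(
    target_dataproduct_key='dp-demo-fabric-ms-swisshealth-upstream',
    target_outport_key='op-demo-swisshealth-suprise',
    target_version_nr='1',
    target_stage='same'
)

# Read the asset by its logical name
df_surprise = surprise_pq_reader.read('surprise_pixels')

# Convert the Spark DataFrame to pandas, then to a 2D integer array
pandas_surprise = df_surprise.toPandas()
pixels = pandas_surprise.to_numpy(dtype=int)

# Render the pixel grid using matplotlib
plt.figure(figsize=(6, 4))
plt.imshow(pixels, cmap='Reds')
plt.axis('off')
plt.title('Surprise')
plt.show()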

What this demonstrates (beyond the fun output):

- The downstream product never touches upstream internals.

- The dependency is explicit: (upstream product + output port + version + asset name).

- You get a clean place to enforce governance rules and avoid spaghetti coupling.
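The three steps (publish, subscribe, read) can also be modeled in a few lines of plain Python to show where the governance check sits. This is a toy stand-in and not how the platform is implemented; `InMemoryPortal` and all of its methods are hypothetical names.

```python
class InMemoryPortal:
    """Toy stand-in for the platform; every name here is hypothetical."""

    def __init__(self):
        self._assets = {}            # (product, outport, version, asset) -> rows
        self._subscriptions = set()  # (consumer, product, outport, version)

    def publish(self, product, outport, version, asset, rows):
        self._assets[(product, outport, version, asset)] = rows

    def subscribe(self, consumer, product, outport, version):
        self._subscriptions.add((consumer, product, outport, version))

    def read(self, consumer, product, outport, version, asset):
        # The subscription is the control point: no subscription, no data.
        if (consumer, product, outport, version) not in self._subscriptions:
            raise PermissionError(f"{consumer} has no subscription to {outport} v{version}")
        return self._assets[(product, outport, version, asset)]


UP = "dp-demo-fabric-ms-swisshealth-upstream"
DOWN = "dp-demo-fabric-ms-swisshealth-downnstream"
PORT = "op-demo-swisshealth-suprise"

portal = InMemoryPortal()
portal.publish(UP, PORT, "1", "surprise_pixels", [[0, 1], [1, 0]])

try:
    portal.read(DOWN, UP, PORT, "1", "surprise_pixels")
    denied = False
except PermissionError:
    denied = True  # reading before subscribing is rejected

portal.subscribe(DOWN, UP, PORT, "1")
rows = portal.read(DOWN, UP, PORT, "1", "surprise_pixels")
print(denied, rows)
```

The consumer's read call carries exactly the explicit dependency described above and nothing about how the producer stores or computes the data.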

Why this simple demo matters in real enterprise setups

Replace the pixel grid with:

- customer profile facts

- contract-level “gold” KPIs

- claims aggregates

- feature tables

- regulatory reporting slices

…and the pattern stays identical.

Key benefits you can call out:

- Autonomy with alignment: teams build independently but integrate through contracts

- Safe evolution: versioned Output Ports allow controlled change

- Observable dependencies: subscriptions become traceable edges in your product graph

- Governance without bottlenecks: platform enforces rules at subscription/publish time
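"Safe evolution" usually comes down to deciding whether a change can stay within the current contract version or needs a new one. Below is a minimal sketch of such a decision, comparing two schemas expressed as plain dictionaries. The function `breaking_changes`, the rule that added columns are non-breaking, and the `bigint` type labels are assumptions for illustration.

```python
def breaking_changes(old_schema, new_schema):
    """Compare two {column: type} mappings and list changes that would break consumers."""
    issues = []
    for column, old_type in old_schema.items():
        if column not in new_schema:
            issues.append(f"removed column {column}")
        elif new_schema[column] != old_type:
            issues.append(f"{column}: {old_type} -> {new_schema[column]}")
    # Columns that only exist in new_schema are treated as non-breaking in this sketch.
    return issues


# Schema of the demo asset: pixel_1 ... pixel_9 (type labels are illustrative)
v1 = {f"pixel_{i}": "bigint" for i in range(1, 10)}

additive = {**v1, "pixel_10": "bigint"}                     # adds a column
removing = {k: v for k, v in v1.items() if k != "pixel_9"}  # drops a column

print(breaking_changes(v1, additive))
print(breaking_changes(v1, removing))
```

An empty result suggests the change could ship under the existing version; a non-empty one is a signal to publish a new Output Port version and let consumers migrate on their own schedule.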
