This article describes a pragmatic way to design a multi-server Model Context Protocol (MCP) architecture for an enterprise data platform.

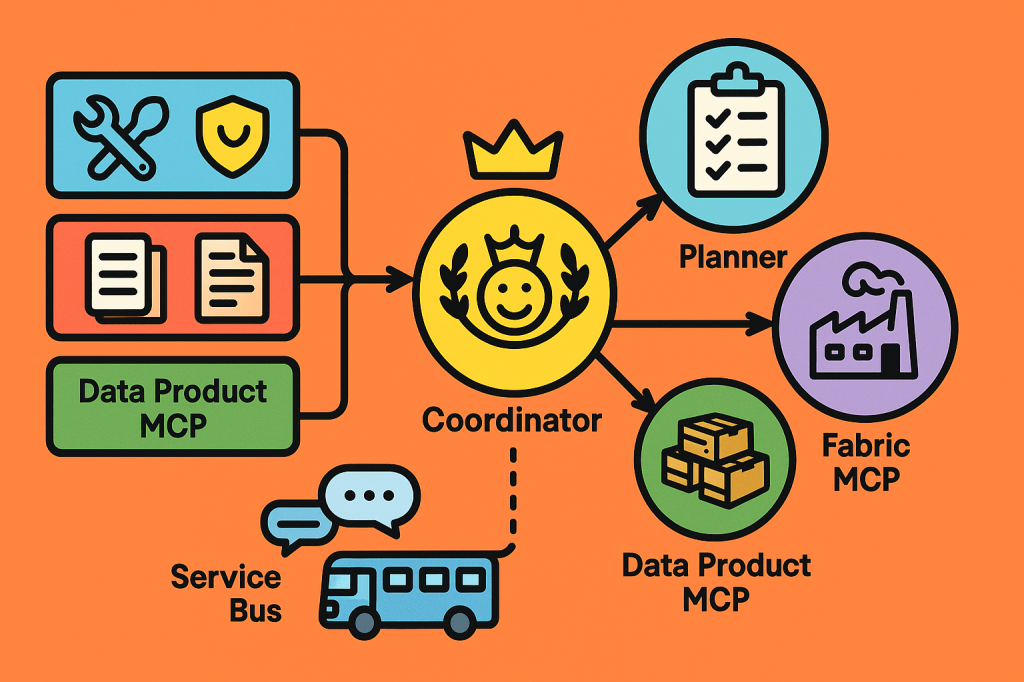

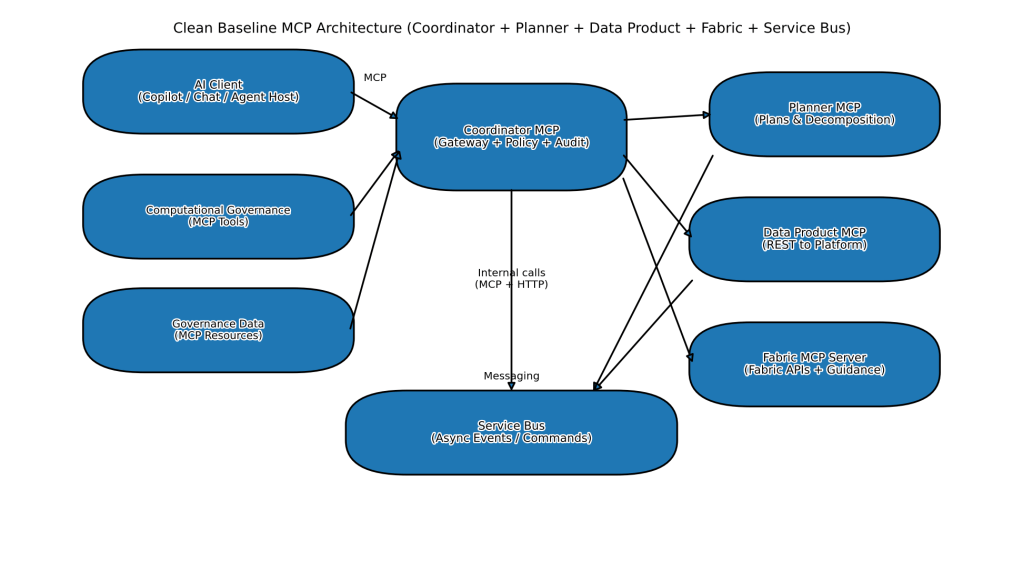

Where:

Computational governance is exposed as MCP tools (guardrails that can execute and enforce decisions).

Governance data is exposed as MCP resources (contracts, definitions, policies, lineage, SLOs).

Azure Service Bus is the messaging backbone for asynchronous and event-driven interactions.

You operate multiple MCP servers with clear responsibilities: Coordinator, Planner, and Data Product Connector. You also integrate Microsoft’s open-source Fabric MCP server to work with Microsoft Fabric APIs and best practices.

MCP itself is an open protocol intended to standardize how AI systems connect to tools and context, and it has a formal specification and reference documentation.

The guiding principle: separate “reasoning” from “authority”

In regulated, multi-domain environments, the key to “agentic” systems is to keep decisions explainable and enforceable:

The model can propose actions (plans). Your platform must authorize and execute actions (tools). Your governance must be the source of truth (resources).

That naturally maps to MCP:

Tools = capabilities with side effects (validate, approve, provision, trigger, notify).

Resources = read-only or versioned context (policy manifests, contracts, lineage, SLO definitions).
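Here is a minimal plain-Python sketch that makes the tools/resources split concrete. It is not the MCP SDK. The `GovernanceServer` class, its methods, and the example URIs are hypothetical. Resources are published under a (URI, version) pair and handed out read-only. Tools are the only path to side effects, and a caller is denied unless it has been granted that tool.

```python
from dataclasses import dataclass, field
from types import MappingProxyType
from typing import Any, Callable, Mapping


@dataclass
class GovernanceServer:
    """Hypothetical model of one MCP server: versioned read-only resources, authorized tools."""
    resources: dict[tuple[str, str], dict[str, Any]] = field(default_factory=dict)
    tools: dict[str, Callable[..., Any]] = field(default_factory=dict)
    grants: dict[str, set[str]] = field(default_factory=dict)  # subject -> tools it may call

    def publish_resource(self, uri: str, version: str, content: dict[str, Any]) -> None:
        # A published (uri, version) pair is never overwritten; publish a new version instead.
        key = (uri, version)
        if key in self.resources:
            raise ValueError(f"{uri}@{version} already published")
        self.resources[key] = dict(content)

    def read_resource(self, uri: str, version: str) -> Mapping[str, Any]:
        # Read-only view: a resource read cannot mutate governance data.
        return MappingProxyType(self.resources[(uri, version)])

    def call_tool(self, subject: str, name: str, **kwargs: Any) -> Any:
        # Authority lives in the platform, not the model: deny unless explicitly granted.
        if name not in self.grants.get(subject, set()):
            raise PermissionError(f"{subject} may not call {name}")
        return self.tools[name](**kwargs)
```

The model can read as much context as it is allowed to. It can only change things through `call_tool`, which is where authorization and auditing attach.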

The target topology: Coordinator + Planner + Data Product MCP + Fabric MCP

A clean baseline architecture looks like this:

Why multiple MCP servers?

Because “one giant MCP server” becomes a monolith quickly. Separate servers let you: version and deploy independently, isolate credentials and blast radius, enforce least privilege, scale the high-traffic components (e.g., Data Product calls) without scaling planning logic.

Responsibilities by server

Coordinator MCP Server (the “control plane gateway”)

This is the single entry point the AI client connects to. It should focus on:

Tool routing (which downstream MCP server should handle a request)

Policy enforcement (pre-checks before any side effect)

Context assembly (fetch relevant resources for planning and execution)

Audit logging (every tool call, every resource read, every approval)
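The following is a minimal sketch of routing, pre-checks, and audit logging, under these assumptions. Downstream servers are modeled as plain Python callables keyed by tool-name prefix (`dataproduct.*`, `fabric.*`, and so on), and `precheck` stands in for your policy engine. `Coordinator` and the audit record format are hypothetical. A real deployment would forward calls over MCP and write audit records to immutable storage.

```python
import json
import time
import uuid
from typing import Any, Callable, Optional

# A downstream server is modeled as a callable taking (tool_name, arguments).
Handler = Callable[[str, dict[str, Any]], dict[str, Any]]


class Coordinator:
    """Hypothetical Coordinator: route, pre-check, execute, audit."""

    def __init__(self, routes: dict[str, Handler],
                 precheck: Callable[[str, dict[str, Any]], bool]) -> None:
        self.routes = routes            # tool-name prefix -> downstream server
        self.precheck = precheck        # policy enforcement before any side effect
        self.audit_log: list[str] = []  # append-only here; immutable storage in production

    def _audit(self, **entry: Any) -> None:
        entry["ts"] = time.time()
        self.audit_log.append(json.dumps(entry, sort_keys=True))

    def call(self, tool: str, args: dict[str, Any],
             correlation_id: Optional[str] = None) -> dict[str, Any]:
        correlation_id = correlation_id or str(uuid.uuid4())
        prefix = tool.split(".", 1)[0]  # "dataproduct.get_contract" -> "dataproduct"
        handler = self.routes.get(prefix)
        if handler is None:
            self._audit(tool=tool, correlation_id=correlation_id, outcome="unroutable")
            raise LookupError(f"no downstream server for prefix '{prefix}'")
        if not self.precheck(tool, args):
            self._audit(tool=tool, correlation_id=correlation_id, outcome="denied")
            raise PermissionError(f"pre-check denied {tool}")
        result = handler(tool, args)
        self._audit(tool=tool, correlation_id=correlation_id, outcome="ok")
        return result
```

Denied and unroutable calls are audited too. Those entries are often the ones an auditor asks for.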

Typical Coordinator tools

governance.check_access(subject, dataproductId, purpose)

governance.validate_contract_change(contractId, proposedSchemaHash)

platform.create_subscription(dataproductId, consumerId, mode)

platform.trigger_refresh(dataproductId | outputPortId)

notify.publish_event(type, payload, correlationId)

Typical Coordinator resources

governance://policies/*

governance://glossary/*

governance://contracts/*

governance://lineage/*

governance://slo/*

The Coordinator is also where you put hard enterprise constraints: data boundary checks, PII constraints, tenant/capacity restrictions, and “approval required” workflows.
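During context assembly, the Coordinator can reuse these URI patterns as read scopes. This sketch is an assumption, not part of MCP: each caller is granted a list of glob patterns, and Python's `fnmatch.fnmatchcase` decides which requested resources it may read. In `fnmatch`, `*` also matches `/`. The caller names and grants are hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical read scopes per calling server, using the resource URI patterns above.
SCOPES: dict[str, list[str]] = {
    "planner": ["governance://policies/*", "governance://contracts/*", "governance://slo/*"],
    "dataproduct-connector": ["governance://contracts/*", "governance://lineage/*"],
}


def readable(caller: str, uri: str) -> bool:
    """True if any pattern granted to `caller` matches `uri`; unknown callers get nothing."""
    return any(fnmatchcase(uri, pattern) for pattern in SCOPES.get(caller, []))


def assemble_context(caller: str, uris: list[str]) -> tuple[list[str], list[str]]:
    """Split requested resource URIs into (granted, withheld) for the given caller."""
    granted = [u for u in uris if readable(caller, u)]
    withheld = [u for u in uris if not readable(caller, u)]
    return granted, withheld
```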

Planner MCP Server (the “plan compiler”)

In MCP terms, a “Planner MCP server” is a server that exposes tools/resources that support planning. Practically, it should:

Take an objective (“onboard consumer X to data product Y”).

Pull the necessary governance resources (definitions, constraints).

Output a structured plan that the Coordinator can execute step by step.

Planner output should be deterministic and reviewable

Use a strict plan schema (steps, required tools, required resources, preconditions, rollback hints). Explicitly list which policies/constraints were used.
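The following shows one possible plan schema, written as frozen dataclasses, with a validator the Coordinator could run before executing anything. The field names, the `uri@version` reference format, and `validate_plan` are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlanStep:
    step_id: str
    tool: str                              # e.g. "policy.evaluate"
    required_resources: tuple[str, ...]    # "uri@version" references this step relies on
    preconditions: tuple[str, ...] = ()
    rollback_hint: str = ""
    requires_approval: bool = False        # human-in-the-loop gate for high-risk steps


@dataclass(frozen=True)
class Plan:
    objective: str
    steps: tuple[PlanStep, ...]
    policies_used: tuple[str, ...]         # explicit policy refs for reviewers and auditors


def validate_plan(plan: Plan, known_tools: set[str]) -> list[str]:
    """Return a list of problems; an empty list means the plan is executable as written."""
    problems: list[str] = []
    seen: set[str] = set()
    for step in plan.steps:
        if step.step_id in seen:
            problems.append(f"duplicate step id {step.step_id}")
        seen.add(step.step_id)
        if step.tool not in known_tools:
            problems.append(f"{step.step_id}: unknown tool {step.tool}")
        if any("@" not in ref for ref in step.required_resources):
            problems.append(f"{step.step_id}: resource reference without a version")
    if not plan.policies_used:
        problems.append("plan does not list the policies it relied on")
    return problems
```

Rejecting unknown tools and unversioned references keeps the model from planning around the catalogue.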

This keeps the model’s reasoning transparent and makes it easier to adopt approval gates (human-in-the-loop) for high-risk steps.

Data Product MCP Server (the “domain connector”)

This server is responsible for talking to data products and your platform’s domain-facing interfaces. It communicates via REST. That choice keeps it interoperable with non-MCP systems and aligns with the “data product as API” principle.

Core duties:

Read product metadata and runtime signals (freshness, quality, record counts)

Negotiate/validate contracts (schema hashes, versions)

Publish events and status updates back into the ecosystem (via Service Bus)

Share “product facts” into governance resources (or store pointers to them)
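Contract validation usually comes down to comparing schema hashes and, when they differ, deciding whether the change breaks consumers. The following is a minimal sketch, assuming schemas are plain column-to-type mappings. It hashes a canonical JSON form (sorted keys, no whitespace) with SHA-256 and treats removed or retyped columns as breaking. The `sha256:` prefix and the compatibility rule are assumptions. Real contracts will carry richer rules.

```python
import hashlib
import json


def schema_hash(schema: dict[str, str]) -> str:
    """Hash a canonical JSON form so key order and whitespace do not change the hash."""
    canonical = json.dumps(schema, sort_keys=True, separators=(",", ":"))
    return "sha256:" + hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def breaking_changes(published: dict[str, str], proposed: dict[str, str]) -> list[str]:
    """Simplified rule: removing or retyping a column breaks consumers; adding one does not."""
    issues: list[str] = []
    for column, col_type in published.items():
        if column not in proposed:
            issues.append(f"removed column {column}")
        elif proposed[column] != col_type:
            issues.append(f"{column}: {col_type} -> {proposed[column]}")
    return issues
```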

Examples:

dataproduct.get_contract(productId)

dataproduct.get_latest_snapshot(productId)

dataproduct.get_quality_report(productId, snapshotId)

dataproduct.request_access(productId, consumerId, purpose)

dataproduct.publish_snapshot_sealed(productId, snapshotId) → emits event

Microsoft Fabric MCP Server (leverage open source instead of rebuilding)

Microsoft provides open-source MCP servers for Fabric-related access. Using them is strategically sound because you avoid implementing and maintaining:

Fabric public API wrappers, item definitions, and platform best-practice knowledge.

Two relevant starting points are:

Fabric MCP server repo (local-first MCP server for Fabric APIs and guidance).

Microsoft’s broader MCP catalog repository.

Integrate Fabric MCP as a downstream server behind your Coordinator. That ensures Fabric actions still pass through your governance checks and audit trail.

Modeling computational governance as MCP tools

Computational governance becomes powerful when it is:

callable (tools), consistent (same checks everywhere), provable (returns evidence and references), event-aware (reacts to signals, not schedules).

A practical tool catalogue often includes:

Policy & compliance

policy.evaluate(request) → allow/deny + rationale + policy refs

policy.require_approval(request) → creates approval task + tracking ID
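This sketch shows what `policy.evaluate` might return. The request fields, the allowed-purpose list, and the policy URIs are hypothetical. The output has a fixed shape: a decision, whether approval is needed, a rationale, and the exact policy versions that produced it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    consumer_domain: str
    product_id: str
    purpose: str
    contains_pii: bool


@dataclass(frozen=True)
class Decision:
    allowed: bool
    requires_approval: bool
    rationale: tuple[str, ...]
    policy_refs: tuple[str, ...]   # versioned policy resources the decision is based on


# Hypothetical policy content; in practice this is read from governance://policies/*.
ALLOWED_PURPOSES = {"analytics", "regulatory-reporting"}
PURPOSE_POLICY = "governance://policies/purpose-limitation@2"
PII_POLICY = "governance://policies/pii@3"


def evaluate(req: AccessRequest) -> Decision:
    """Deterministic rules in, structured evidence out."""
    if req.purpose not in ALLOWED_PURPOSES:
        return Decision(False, False, (f"purpose '{req.purpose}' not permitted",), (PURPOSE_POLICY,))
    rationale = [f"purpose '{req.purpose}' permitted"]
    refs = [PURPOSE_POLICY]
    if req.contains_pii:
        rationale.append("product contains PII: human approval required")
        refs.append(PII_POLICY)
    return Decision(True, req.contains_pii, tuple(rationale), tuple(refs))
```

If `requires_approval` is true, the Coordinator calls `policy.require_approval` rather than proceeding.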

Quality & reliability

quality.check(snapshotId, rulesetId) → pass/fail + metrics

slo.compute(eventStream) → SLO status, error budget consumption
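Once the event stream has been counted into good and total events, `slo.compute` reduces to simple arithmetic. This sketch assumes that counting step has already happened. With an SLO target t over N events, the error budget is (1 − t) × N bad events, and consumption is the number of bad events divided by that budget. For example, 20 late refreshes out of 10,000 against a 99.5% target use 20 / 50 = 40% of the budget. The following is a minimal sketch with hypothetical names:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SloStatus:
    compliance: float       # fraction of good events in the window
    budget_consumed: float  # share of the error budget used (1.0 = fully spent)
    breached: bool


def compute_slo(good: int, total: int, target: float) -> SloStatus:
    """Event-based SLO: the error budget is (1 - target) * total allowed bad events."""
    if total <= 0 or not 0.0 < target < 1.0:
        raise ValueError("need total > 0 and 0 < target < 1")
    bad = total - good
    allowed_bad = (1.0 - target) * total
    consumed = bad / allowed_bad
    return SloStatus(compliance=good / total, budget_consumed=consumed, breached=consumed > 1.0)
```

A `QualityDegraded` event could be emitted when `breached` flips to true, or earlier at a consumption threshold you choose.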

FinOps & capacity guardrails

finops.estimate_cost(changeSet) → projected delta

finops.enforce_budget(budgetId, request) → allow/deny with thresholds
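Here is a sketch of budget enforcement with two thresholds. It assumes `finops.estimate_cost` has already produced a projected delta, and it skips the lookup by `budgetId` by passing amounts directly. The 80% warning and 100% deny thresholds, the `allow_with_alert` outcome (which could trigger a `CostAlert` event), and the function signature are assumptions.

```python
def enforce_budget(budget: float, spent: float, projected_delta: float,
                   warn_at: float = 0.8, deny_at: float = 1.0) -> dict:
    """Compare projected utilisation against thresholds and return the evidence, not just a verdict."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    utilisation = (spent + projected_delta) / budget
    if utilisation >= deny_at:
        decision = "deny"
    elif utilisation >= warn_at:
        decision = "allow_with_alert"  # e.g. also publish a CostAlert event
    else:
        decision = "allow"
    return {
        "decision": decision,
        "projected_utilisation": round(utilisation, 4),
        "thresholds": {"warn_at": warn_at, "deny_at": deny_at},
    }
```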

Lineage & evidence

lineage.register(runId, inputs, outputs)

evidence.attach(runId, artefacts) → stores pointers/hashes for audits

These should return structured outputs suitable for audits, not prose.

Governance data as MCP resources: “your agent’s ground truth”

Governance resources should be versioned and stable:

Contracts (schema, schema-hash, semantic version, deprecation policy)

Definitions (business glossary, KPI meaning, “customer” definition by domain)

Policies (PII tagging rules, retention, purpose limitation, approvals)

Operational metadata (SLOs, runbooks, escalation paths, ownership)

Treat resources as:

Cacheable

Traceable (resource URI + version)

Referenceable in plans and tool outputs

This is what makes answers and actions defensible.
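A pinned (URI, version) pair never changes, so it can be cached indefinitely, and the same pair doubles as the reference written into plans and tool outputs. The following is a minimal sketch. `ResourceCache`, the `fetch` callable, and the `uri@version` format are hypothetical.

```python
from typing import Any, Callable


class ResourceCache:
    """Cache pinned (uri, version) reads; a pinned version is immutable, so entries never go stale."""

    def __init__(self, fetch: Callable[[str, str], dict[str, Any]]) -> None:
        self._fetch = fetch  # hypothetical loader, e.g. a resource read via the Coordinator
        self._entries: dict[tuple[str, str], dict[str, Any]] = {}
        self.misses = 0

    def get(self, uri: str, version: str) -> dict[str, Any]:
        key = (uri, version)
        if key not in self._entries:
            self.misses += 1
            self._entries[key] = self._fetch(uri, version)
        return self._entries[key]

    @staticmethod
    def ref(uri: str, version: str) -> str:
        """Reference to embed in plans and tool outputs (format is an assumption)."""
        return f"{uri}@{version}"
```

Resolving a "latest" alias still needs a live lookup. Only pinned versions are safe to cache this way.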

Service Bus as the event spine (signals over schedules)

While MCP is well suited to synchronous tool calls, Service Bus is ideal for:

long-running workflows, retries and dead-lettering, decoupling producers and consumers, “fan-out” to multiple downstream consumers.

Microsoft also documents Azure MCP Server tooling for Service Bus operations (queues/topics/message inspection).

Recommended event types (examples)

SnapshotSealed (data product produced a versioned snapshot)

ContractChanged (new schema-hash/version available)

QualityDegraded (SLO breach, anomaly)

AccessGranted / AccessRevoked

CostAlert (budget threshold crossed)

Operational essentials

Correlation IDs end-to-end

Idempotency keys for tool calls that cause side effects

Dead-letter policies, with runbook links stored as governance resources
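Service Bus handles retries, a maximum delivery count, and dead-letter queues on the broker side, but consumers still need to be idempotent. The plain-Python sketch below models only the consumer logic and does not use the Azure SDK. It covers an envelope carrying a correlation ID and an idempotency key, duplicate suppression, and dead-lettering with a runbook link once deliveries are exhausted. All names are hypothetical, including the runbook URI and the in-memory `processed` set.

```python
from dataclasses import dataclass
from typing import Any, Callable

EVENT_TYPES = {"SnapshotSealed", "ContractChanged", "QualityDegraded",
               "AccessGranted", "AccessRevoked", "CostAlert"}


@dataclass
class Envelope:
    event_type: str
    payload: dict[str, Any]
    correlation_id: str      # propagated end-to-end across tools and events
    idempotency_key: str     # same key => the side effect is applied at most once
    delivery_count: int = 1  # incremented by the broker on each redelivery


class Consumer:
    def __init__(self, handle: Callable[[Envelope], None], max_deliveries: int = 5,
                 runbook_uri: str = "governance://runbooks/dead-letter") -> None:
        self.handle = handle
        self.max_deliveries = max_deliveries
        self.runbook_uri = runbook_uri    # hypothetical runbook resource
        self.processed: set[str] = set()  # in-memory for the sketch; durable store in practice
        self.dead_letters: list[dict[str, Any]] = []

    def _dead_letter(self, msg: Envelope, reason: str) -> str:
        self.dead_letters.append({"reason": reason, "correlation_id": msg.correlation_id,
                                  "runbook": self.runbook_uri})
        return "dead-lettered"

    def receive(self, msg: Envelope) -> str:
        if msg.event_type not in EVENT_TYPES:
            return self._dead_letter(msg, f"unknown event type {msg.event_type}")
        if msg.idempotency_key in self.processed:
            return "duplicate-skipped"
        try:
            self.handle(msg)
        except Exception as exc:
            if msg.delivery_count >= self.max_deliveries:
                return self._dead_letter(msg, str(exc))
            return "retry"  # abandon; the broker redelivers with delivery_count + 1
        self.processed.add(msg.idempotency_key)
        return "completed"
```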

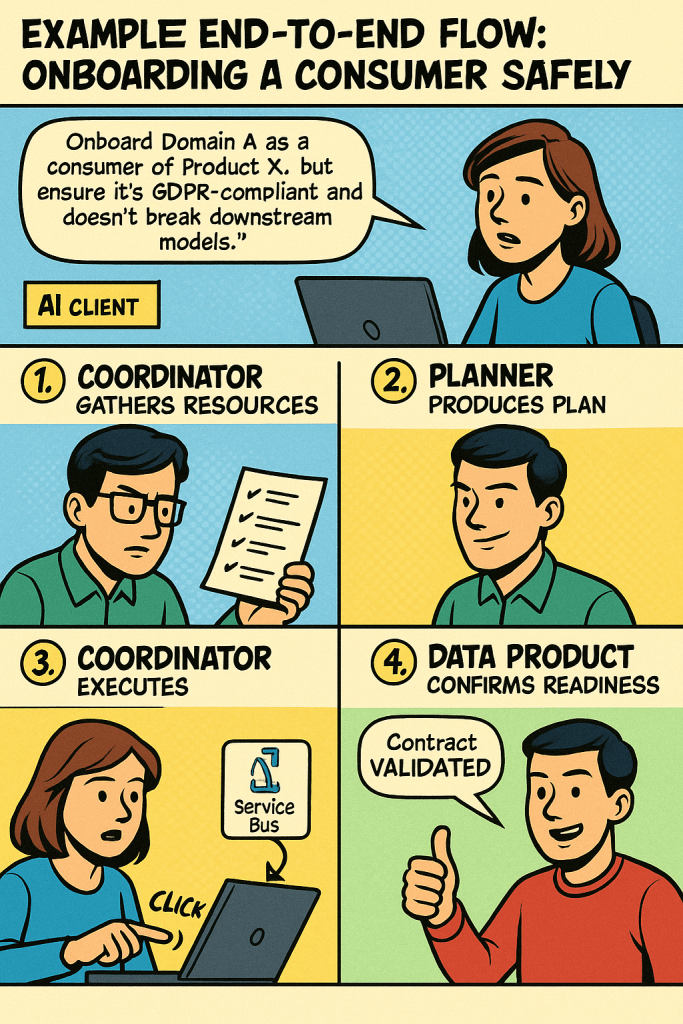

Example end-to-end flow: onboarding a consumer safely

User asks (AI client): “Onboard Domain A as a consumer of Product X, but ensure it’s GDPR-compliant and doesn’t break downstream models.”

Coordinator gathers resources:

Contract + schema-hash

PII tags + purpose limitation policy

Subscription policy

SLOs and “required checks”

Planner produces plan:

Step-by-step list of required checks and actions

Explicit preconditions (e.g., “PII requires approval”)

Rollback notes (“revoke subscription if quality gate fails”)

Coordinator executes:

Runs policy.evaluate

If needed, triggers policy.require_approval

Calls platform.create_subscription(…, mode=inactive_by_default) (a good default in regulated settings)

Notifies via Service Bus: AccessGranted or SubscriptionCreated

Data Product MCP confirms readiness:

Validates contract compatibility

Shares product metadata back as governance evidence

Every step is logged with resource versions and tool outputs.
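The Coordinator's part of this flow can be sketched as a straight-line function over injected tool callables. The tool names come from the catalogue above. The wiring, argument names, and trail format are assumptions. Approval is simplified to a synchronous call here, although in practice the flow would resume on an event.

```python
from typing import Any, Callable

Tool = Callable[..., dict[str, Any]]


def onboard_consumer(tools: dict[str, Tool], consumer: str, product: str, purpose: str,
                     resource_versions: dict[str, str]) -> list[dict[str, Any]]:
    """Run the Coordinator's onboarding steps in order and return the audit trail."""
    trail: list[dict[str, Any]] = []

    def run(tool: str, **args: Any) -> dict[str, Any]:
        out = tools[tool](**args)
        # Record each step with its inputs, outputs and the resource versions it relied on.
        trail.append({"tool": tool, "args": args, "output": out,
                      "resources": dict(resource_versions)})
        return out

    decision = run("policy.evaluate", consumer=consumer, product=product, purpose=purpose)
    if not decision.get("allowed"):
        return trail
    if decision.get("requires_approval"):
        approval = run("policy.require_approval", consumer=consumer, product=product)
        if approval.get("status") != "approved":
            return trail  # stop here; resume when the approval event arrives
    run("platform.create_subscription", dataproductId=product, consumerId=consumer,
        mode="inactive_by_default")
    run("notify.publish_event", type="SubscriptionCreated",
        payload={"consumer": consumer, "product": product},
        correlationId=f"onboard:{consumer}:{product}")
    return trail
```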

Security and operational guardrails you should design in from day one

Identity & auth

Front door: OIDC/OAuth to the Coordinator

East-west: mTLS + managed identity (or workload identity) per server

Least privilege: each MCP server gets only the scopes it needs

Data protection

Resource-level access controls (governance resources are not universally readable)

PII minimization (tools should return aggregates or references, not raw sensitive payloads)

Auditability

Immutable logs for resource reads, tool calls, approvals, and emitted events

Evidence pointers (hashes, run IDs, versions)

Reliability

Use Service Bus for long operations and retries

Explicit timeouts and circuit breakers around REST calls to products/platform
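Here is a minimal circuit breaker for wrapping REST calls. It is written from scratch rather than taken from a library, and it is single-threaded (not thread-safe). After `failure_threshold` consecutive failures, it fails fast for `reset_after_s` seconds, then lets a trial call through. The class and parameter names are hypothetical. The wrapped call should still carry its own timeout, for example `urllib.request.urlopen(url, timeout=5)`.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpen(Exception):
    """Raised instead of calling a downstream that is currently considered unhealthy."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock                      # injectable for tests
        self.failures = 0                       # consecutive failures
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                raise CircuitOpen("failing fast: downstream marked unhealthy")
            self.opened_at = None               # half-open: let a trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            # A failed trial keeps failures >= threshold, so the circuit re-opens at once.
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0                       # any success closes the circuit
        return result
```

Usage: `breaker.call(lambda: urllib.request.urlopen(product_url, timeout=5).read())`, where `product_url` is whichever data product endpoint you are calling.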

A pragmatic implementation plan

Start with the resource taxonomy (contracts/policies/lineage/SLO/runbooks).

Define the tool catalogue for computational governance (evaluate, approve, validate, provision, notify).

Implement the Coordinator as the only public MCP endpoint.

Add the Planner once you have stable resources/tools to plan against.

Add the Data Product MCP to standardize product interactions (REST) and event emission.

Integrate the Microsoft Fabric MCP server behind the Coordinator for Fabric operations and best-practice context.
